AI Regulation Trends: Your Guide to AI Policies in the US, UK, and EU

- GRC

- 03 July 24

Introduction

The rapid evolution of Artificial Intelligence (AI), and particularly Generative AI, has opened up new opportunities and prospects for development and inclusive growth. But in the wrong hands, AI can enable fraud, discrimination, and disinformation, stifle healthy competition, disenfranchise workers, and even threaten national security. The United States, the European Union, and the United Kingdom have taken the first steps to regulate the development of AI, with a strong focus on data privacy, transparency, accountability, security, and ethics.

Here is a quick overview of the key regulations being implemented in these three regions, highlighting the main points to note.

The European Union’s Artificial Intelligence Act

The European Parliament adopted the Artificial Intelligence Act in March 2024, and the Act will be fully applicable two years after its entry into force. One of its objectives is to establish a uniform, technology-neutral definition of AI that can be applied to future AI systems. The Act also aims to ensure that AI systems used in the EU are safe, transparent, traceable, non-discriminatory, and environmentally friendly, and that they are overseen by people rather than by automation. The law takes a risk-based approach, setting different requirements depending on the level of risk.

Risk levels: The Act sets out obligations for providers and users that depend on the level of risk an AI system poses. The two most strictly regulated categories are:

Unacceptable Risk AI Systems - These are considered to be harmful for people and will be banned:

- Cognitive behavioral manipulation of people or vulnerable groups

- Social scoring or segmentation of people based on behavior, personal characteristics or socio-economic status

- Biometric identification and categorization of people

- Real-time and remote biometric identification systems, such as facial recognition

Some exceptions, subject to specific rules, are permitted for law enforcement purposes.

High Risk AI Systems - AI systems that negatively affect people's safety or fundamental rights. These fall into two categories:

AI systems used in products covered by the EU’s product safety legislation, such as toys, aviation devices and systems, cars, medical devices and elevators.

AI systems in the following specific areas, which will have to be registered in an EU database:

- Systems used for managing or operating critical infrastructure

- Systems used for education and vocational training

- Systems used for employment, worker management, and access to self-employment

- Systems involved in access to and use of essential private and public services and benefits

- Law enforcement systems

- Systems involving migration, asylum, and border control management

- Systems that assist in the legal interpretation and application of the law

High-risk AI systems will be assessed before they are placed on the market and throughout their lifecycle. EU residents will be able to file complaints about AI systems with the relevant national authorities.

Transparency requirements – While the Act does not classify Generative AI as high risk, it requires generative AI to comply with transparency requirements and EU copyright law, including:

- Disclosing that content was generated by AI

- Designing the model to prevent it from generating illegal content

- Publishing summaries of copyrighted data used for training

Supporting Innovation – The Act aims to help startups and small and medium-sized enterprises (SMEs) leverage AI by giving them opportunities to develop and train AI models before public release. National authorities must provide companies with a testing environment that simulates real-world conditions.

United Kingdom’s Response to the White Paper Consultation on Regulating Artificial Intelligence

In February 2024, the UK Government published its response to the 2023 white paper consultation on AI regulation. Its pro-innovation approach is outcome-based and focuses on two key characteristics of AI, adaptivity and autonomy, which will guide domain-specific interpretation by regulators.

It provides preliminary definitions for three types of powerful AI systems that can be integrated into downstream AI systems:

- Highly capable general-purpose AI (GPAI) – foundation models, such as large language models, that can carry out a wide range of tasks. Their capabilities can range from basic to advanced and can match or even exceed those of the most advanced models in use today.

- Highly capable narrow AI – systems that carry out a limited range of tasks within a specific field or domain. Within those domains, they can match or exceed the capabilities of the most advanced models in use today.

- Agentic AI – an emerging subset of AI technologies that can complete a number of sequential steps over long periods of time, using tools such as the internet and narrow AI models.

It sets out five cross-sectoral principles for regulators to apply when promoting responsible AI design, development, and use:

- Safety, security, and robustness

- Appropriate transparency and explainability

- Fairness

- Accountability and governance

- Contestability and redress

The principles are to be implemented on the basis of three foundational pillars:

- Working with existing regulatory authorities and frameworks – The UK will not set up a separate AI regulator. Instead, existing regulators such as the Information Commissioner's Office (ICO), Ofcom, and the Financial Conduct Authority (FCA) will apply the five principles within their respective domains, using existing laws and regulations. They are expected to implement the AI regulatory framework in their domains quickly. Each regulator's strategy must include an overview of the steps taken to align its AI plans with the framework's principles, an analysis of AI-related risks, and an assessment of its ability to manage these risks.

- Creating a central function for risk monitoring and regulatory coordination – The UK has set up a central function within the Department for Science, Innovation and Technology (DSIT) to monitor and evaluate AI risks and address gaps in the regulatory landscape, because AI opportunities and risks cannot be addressed in isolation.

- Fostering innovation through a multi-agency advisory service – A multi-regulator advisory service, the AI and Digital Hub, will be launched to help innovators meet legal and regulatory requirements before they launch their products.

Blueprint for an AI Bill of Rights by the White House Office of Science and Technology Policy

The White House Office of Science and Technology Policy (OSTP) has formulated the non-binding Blueprint for an AI Bill of Rights, which sets out five principles to guide the design, use, and deployment of automated systems. These are:

Safe and Effective Systems

- Diverse communities, stakeholders, and domain experts should be consulted during the development of automated systems so that concerns, risks, and potential impacts can be better identified

- Systems should undergo pre-deployment testing, risk identification and mitigation, and ongoing monitoring to ensure that they are safe and effective

- Automated systems should be designed to proactively protect the American public from harm caused by unintended uses or impacts of the systems. This includes protection from inappropriate or irrelevant use of data in the design, development, and deployment of these systems

Algorithmic Discrimination Protections

- Algorithmic discrimination occurs when automated systems contribute to unjustified differential treatment of people based on their race, color, ethnicity, gender, medical condition, sexual orientation, gender identity, religion, disability, or any other classification protected by law

- Designers, developers, and deployers should take proactive and continuous measures to protect the American public from such discrimination and to ensure that systems are designed to be equitable

- These measures should include proactive equity assessments as part of system design, use of representative data, protection against proxies for demographic features, accessibility for people with disabilities, disparity testing and mitigation, and organizational oversight

- The Blueprint also recommends independent evaluation and plain-language reporting

Data Privacy

- Systems should include built-in protections against abusive data practices and, by default, give individuals agency over how their personal data is collected and used

- Designers, developers, and deployers of such systems should seek individuals' consent for the collection, use, transfer, access, and deletion of their personal data

- Consent requests should be brief and written in plain, easily understood language

- Data from sensitive domains such as healthcare, education, criminal justice, and finance should be used only for necessary functions and should be protected by ethical review and use prohibitions

- The American public should not be subjected to unchecked surveillance, and surveillance technologies should be subject to heightened oversight, including pre-deployment assessment of their potential harms

- Continuous surveillance should not be used in education, work, housing, or other contexts where it is likely to limit rights, opportunities, or access

Notice and Explanation

- People should know when an automated system is being used and understand how it affects them

- This should be communicated through plain-language documentation that clearly describes how the system works and the outcomes it produces

- People should be told when an outcome affecting them was determined by an automated system, even when that system was not the only input to the outcome

Human Alternatives, Consideration, and Fallback

- People should be able to opt out of automated systems in favor of a human alternative and should have access to a person who can help remedy any problems they encounter

- Human alternatives should be accessible, equitable, effective, and well managed, with adequate operator training, and should not place an additional burden on the public

- Automated systems used in sensitive domains should be tailored to their purpose, provide meaningful access for oversight, and include human consideration for high-risk or adverse decisions

In addition to these federal guidelines, several states are formulating their own regulations. Over the last five years, 17 states (California, Colorado, Connecticut, Delaware, Illinois, Indiana, Iowa, Louisiana, Maryland, Montana, New York, Oregon, Tennessee, Texas, Vermont, Virginia, and Washington) have enacted 29 bills on AI regulation.

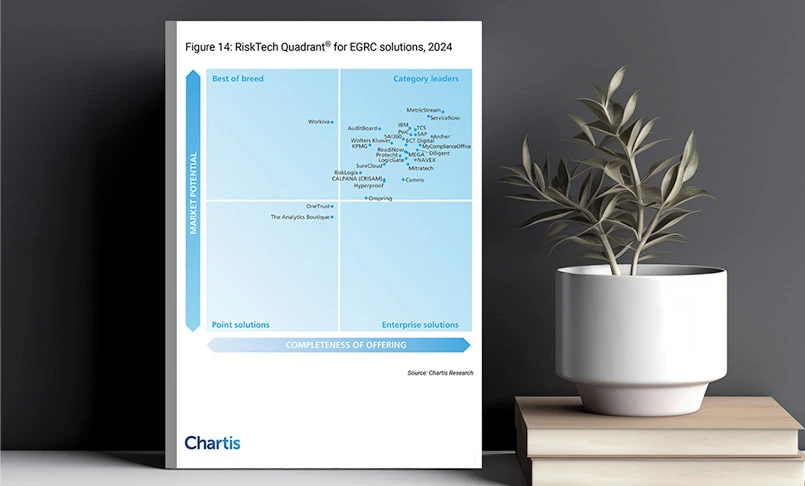

Stay Ahead with MetricStream

AI technologies are here to stay, and the world has to learn to use them safely for the betterment of humanity. Regulations for AI development and use are critical to protect people from bias, discrimination, and breaches of privacy. AI technologies are evolving at an unprecedented pace, and regulators around the world are keeping up with rapid updates and new frameworks. Organizations need automated compliance platforms to keep pace with this rapidly changing regulatory landscape.

MetricStream’s Compliance Management can simplify and strengthen enterprise compliance initiatives amid a rapidly changing regulatory landscape. Gain greater visibility into control effectiveness and remediate issues quickly with streamlined:

- Mapping of regulations to processes, assets, risks, controls, and issues

- Identifying, prioritizing, managing, and monitoring areas of high compliance risk

- Performing control testing and monitoring

- Creating and communicating corporate policies

- Identifying, capturing, and managing regulatory updates

- Generating reports with drill-down capabilities

Even as compliance management is simplified and streamlined, it is important to have a mechanism in place to keep track of rapidly evolving regulations. MetricStream’s Regulatory Change Management platform is a centralized framework that can help organizations capture, curate, identify, extract, consolidate, and manage regulatory changes and updates sourced from diverse providers.

Find out more. Request a personalized demo today!