Taming OpenAI is a Shared Responsibility

- GRC

- 12 June 24

Introduction

It is almost an understatement today to say that the rise of Artificial Intelligence (AI) and Generative AI (GenAI) has been a game-changer for businesses. In India, as many as 84% of CEOs are either securing new capital or reallocating funds from other budgets to finance GenAI, aiming to gain a competitive edge. This is understandable, given that about 84% of Indian consumers say they would most likely purchase from an organization that uses GenAI.

As we stand on the brink of AI's vast potential, it is critical to approach the technology with both awe and a measure of prudent skepticism. Let's face it: the big player in the game right now is OpenAI, and it holds a great deal of power. And guess what? With great power comes great responsibility, and concentrating so much power in a single organization raises significant concerns.

Who can overlook the lawsuits OpenAI faces from best-selling authors, who raise valid concerns about copyright infringement?

Against this background, one must ask how OpenAI can be kept in check. The question extends to a broader community of researchers, tech companies, policymakers, corporations, and even end-users, all of whom should participate in this conversation.

Navigating the OpenAI Quandary

OpenAI, frequently leading the charge in AI research, has achieved noteworthy advancements. From building language models that produce human-like text to tackling global challenges, the organization has sparked a surge of technological progress. For countries like India, AI adoption has already translated into positive outcomes and is expected to boost GDP by a substantial $1.2-1.5 trillion over the next seven years.

However, the responsibility for developing and implementing AI does not rest solely on the shoulders of its creators. It calls for a collaborative effort involving a network of stakeholders, each shouldering their part of the responsibility.

Here's what each stakeholder must do.

Standardize Benchmarks

Researchers must collaborate to establish best practices that collectively address the potential risks of AI. Research teams should work on setting standard benchmarks for testing AI fairness and on developing open-source auditing tools.

A Culture of Ethics

Next in line are the tech companies actively engaged in implementing AI technology. Tasked with deploying AI solutions across diverse industries, these companies are responsible for ensuring compliance with regulatory frameworks and for designing AI products that minimize risk. For their part, developing strong ethical AI guidelines and cultivating a culture of responsible AI within the company is of utmost importance. Companies should establish AI ethics committees accountable for overseeing AI projects, aligning them with company values, and ensuring transparency in decision-making.

Integrate AI Governance

Conducting AI risk assessments to proactively identify and mitigate potential issues such as data breaches, algorithmic bias, and compliance gaps is crucial. Organizations must integrate AI governance into their risk management and compliance strategies (GRC for AI, technically speaking). Essential to this process is collaboration with AI experts to formulate policies and establish processes that prioritize responsible AI adoption.

Balancing Innovation and Regulation

Policymakers must foster an environment that encourages AI innovation while regulating its use to mitigate risk. Formulating resilient, adaptive regulations that both promote innovation and protect the public interest is challenging, so policymakers must collaborate closely with experts, tech firms, and the general public to make well-informed decisions and tackle AI's ethical, legal, and societal implications.

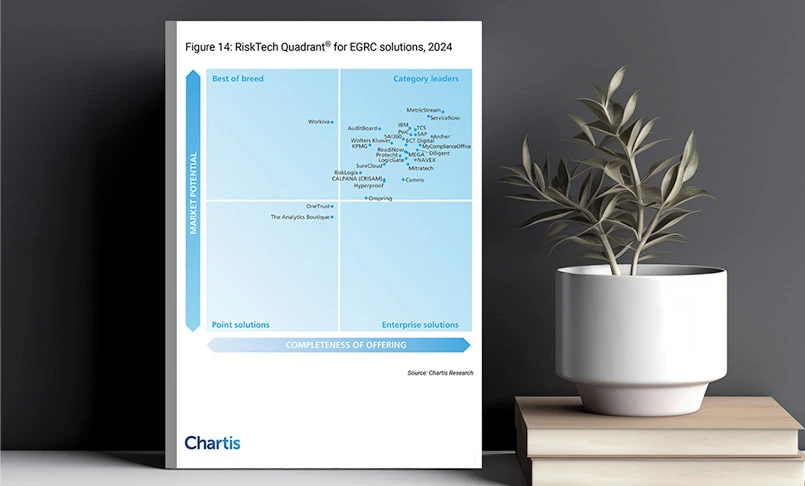

Approach to GRC

The fundamental practices of Governance, Risk Management, and Compliance (GRC) have consistently played a crucial role in the business world, ensuring that organizations operate ethically and within legal boundaries. However, the use of AI across diverse facets of business operations demands that GRC practices evolve.

The GRC approach must take into account AI-related risks such as data breaches, algorithmic bias, and compliance issues. Organizations must create governance frameworks that supervise AI strategies, investments, and initiatives, for example by aligning AI projects with organizational goals and ensuring transparency in decision-making.

The responsibilities and risks of AI should not rest solely on the shoulders of one company. Taming OpenAI, or any similar technology, will require collaboration between researchers, tech firms, policymakers, corporations, and end-users. These efforts must also be rooted in robust, modern, technology-enabled GRC practices to foster an environment of responsible innovation.

This blog was initially featured as an article on ET CISO.

Find out more about MetricStream ConnectedGRC.